Alexander Karpekov

PhD Student in Computer Science. Data Scientist. Explorer.

Hi there 👋🏻 My name is Alexander. I am working on my PhD in Computer Science at Georgia Tech, advised by Sonia Chernova and Thomas Plötz.

After spending a decade in industry, working on breaking news discovery at Dataminr and on Search and YouTube Music recommendations at Google, I returned to academia to focus on Machine Learning and AI research. I’m passionate about Explainable AI, pattern discovery in large datasets, and interactive data visualization.

Outside of school, I enjoy snowboarding (calling Silverthorne, Colorado my second home), rock climbing (an aspiring lead belayer), and rowing (GT Crew). I like studying foreign languages and learning about new cultures. One day, I hope to learn how to play piano.

I am always open to collaborations — feel free to get in touch!

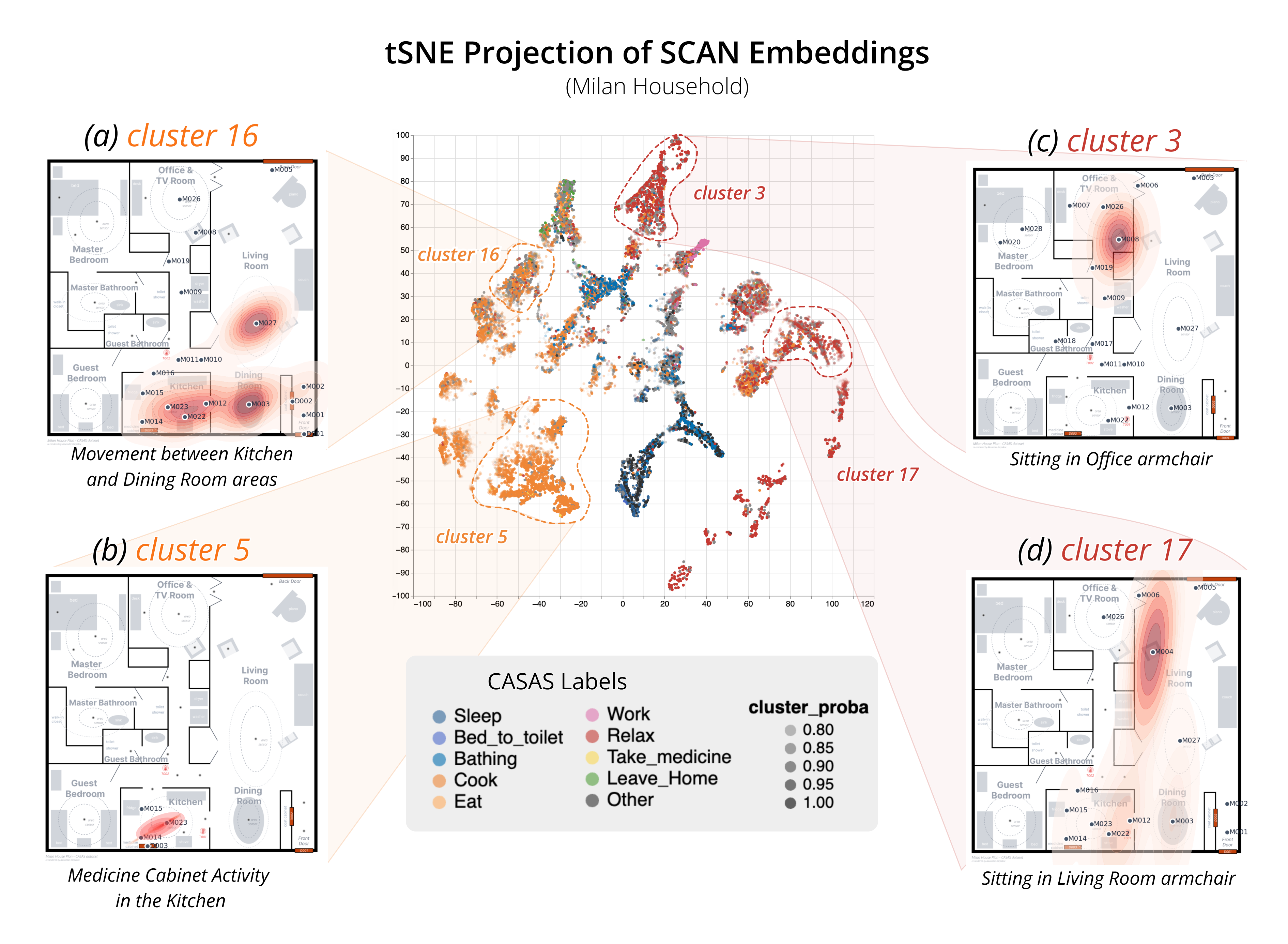

In this paper, we introduce DISCOVER, an active-learning method to identify fine-grained human activities from unlabeled smart home sensor data. DISCOVER combines self-supervised feature extraction and embedding clustering with a custom-built visualization tool, which allows researchers to identify, label, and track human activities and changes over time.

An interactive visualization tool that helps users understand how transformer models work through hands-on experimentation and real-time feedback.

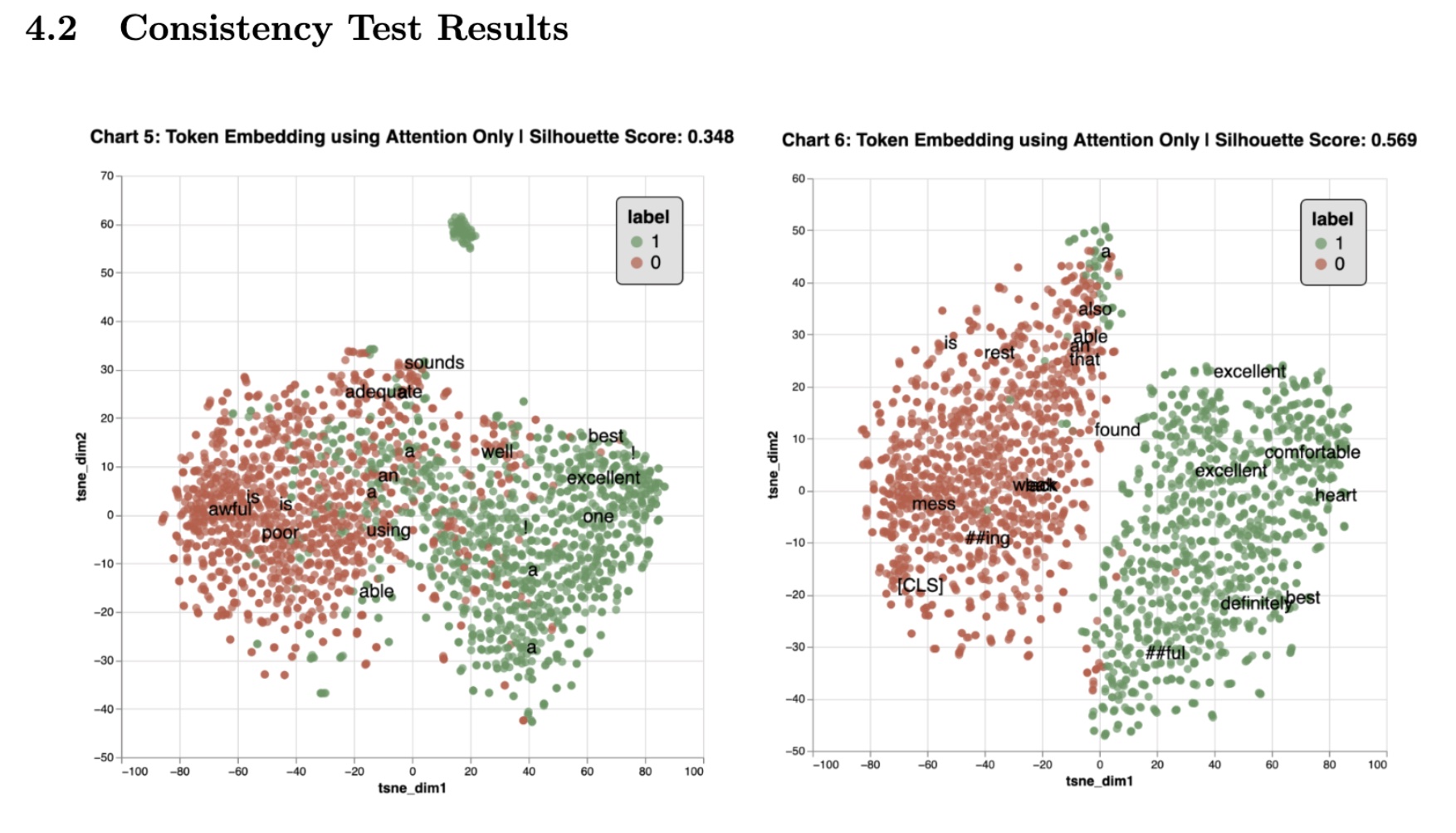

This project investigates the use of Transformer attention weights for deriving feature importance in NLP tasks. It demonstrates that combining attention weights with gradient information improves explainability, and it provides an open-source GitHub tool for applying this method to any Transformer model.

This project examines Guangdong's shifting economic growth using Night Lights data from satellites, focusing on development beyond the Pearl River Delta and the impact of 2008 government policies.